Community Datasets in Google Earth Engine: An experiment

Over the last couple of years, Google Earth Engine has gone from an experiment supporting simple raster operations to one of the most widely used remote sensing platforms. It allows analysis to scale massively and gives users the same experience with analysis no matter where they are.

The idea that data lives and is analyzed on Google’s back end meant that access was becoming easier, and so was repeatability. One of the key features that keeps Earth Engine users coming back for more is the constant iteration and the sheer ingenuity of the community, whether in our Google groups or in follow-ups to virtual meetings.

So when someone posted asking about Facebook’s High-Resolution Settlement Layer, I was curious to learn more and wanted to treat it like a community problem. This layer is currently not part of the Google Earth Engine catalog, so it made a perfect setup to experiment with community-curated datasets: not a new concept, but always fun to experiment with.

This project started with an email message about Facebook’s High-Resolution Settlement Layer, a population density dataset generated at a resolution of about 30m. It was part of their open datasets and was distributed under the Creative Commons Attribution International license. The dataset was hosted and shared by the Center for International Earth Science Information Network (CIESIN) at Columbia University.

As Facebook added more countries and the datasets grew larger and were constantly updated, they were also migrated to the Humanitarian Data Exchange.

Putting the pieces together: A to-do list

The first thing I needed to do was visit the Humanitarian Data Exchange website. You can sign up for an account and register your organization to share and access data. Registration also allows you to generate an API key to interact with their API; you can find the information here. I am sure that if I had spent more time I might have figured out a neater way using the hdx-python-api. The approach I took instead relies on the fact that the webpage can be scraped for the URL links associated with Facebook as the organization providing this dataset.

The next step is to filter this further by selecting zipped geotiff as the format and to increase the page size to a much larger value, about 250. So we get a URL that takes into account the organization, the page size, and the dataset type:

URL: https://data.humdata.org/organization/facebook?res_format=zipped%20geotiff&q=&ext_page_size=250

The URL allows you to filter by file format, set the page size, and look at the population datasets. For now, most of the datasets uploaded by Facebook are population datasets, so this was the entry point.
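As a minimal sketch of how that URL is put together, the snippet below rebuilds it from its three parts using only the Python standard library. The function name `build_search_url` and its parameter names are my own for illustration and are not taken from the tool in the repo.

```python
from urllib.parse import quote, urlencode

# Organization page on the Humanitarian Data Exchange (from the URL above)
BASE_URL = "https://data.humdata.org/organization/facebook"


def build_search_url(res_format="zipped geotiff", page_size=250, query=""):
    """Return the organization search URL for the given filters."""
    params = {
        "res_format": res_format,   # file format filter
        "q": query,                 # free-text query, left empty here
        "ext_page_size": page_size, # number of results per page
    }
    # quote_via=quote encodes spaces as %20 instead of '+'
    return f"{BASE_URL}?{urlencode(params, quote_via=quote)}"


print(build_search_url())
```

Changing `res_format` or `page_size` is the kind of tweak that would let the same approach be pointed at other formats or larger listings.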

Building a tool

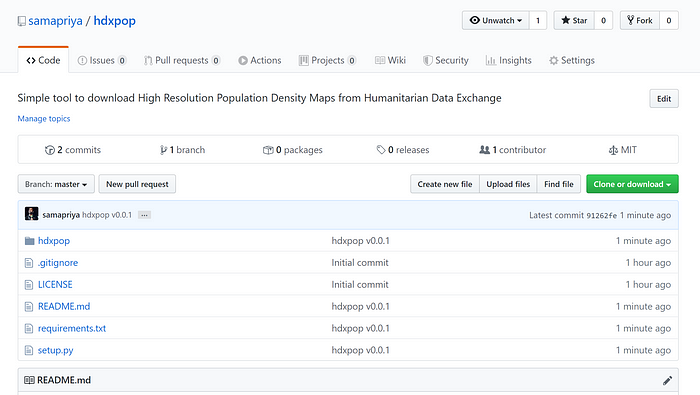

If you want to try this out, you can go to the GitHub page here and download the repo to download these datasets locally.

If you would like to skip the method, I have already ingested these datasets into Google Earth Engine and you can access the script and datasets using this link. The overall description of the collection, taken from the Humanitarian Data Exchange site, is included below.

To reference this data, please use the following citation: Facebook Connectivity Lab and Center for International Earth Science Information Network - CIESIN - Columbia University. 2016. High Resolution Settlement Layer (HRSL). Source imagery for HRSL Copyright 2016 DigitalGlobe. Accessed DAY MONTH YEAR. Data shared under: Creative Commons Attribution International. You can get the methodology here: https://dataforgood.fb.com/docs/methodology-high-resolution-population-density-maps-demographic-estimates/ and the step-by-step download documentation here:

https://dataforgood.fb.com/docs/high-resolution-population-density-maps-demographic-estimates-documentation/

The tool is set up to do two primary things:

Download the zipped datasets

Unfortunately, the file names for these zipped files are not consistent: some end with _geotiff.zip while others just end with .zip. So the first tool searches using the URL we retrieved. Though the URL is fixed for this purpose, one can change it to reapply the tool to other datasets.
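One way to cope with the inconsistent names is to match on the .zip extension alone when collecting links from a page. The sketch below does that with the standard library HTML parser. It assumes the download links appear as plain `href` attributes on anchor tags, which may not match the real page markup, and the class and function names are hypothetical rather than those used in the repo.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ZipLinkParser(HTMLParser):
    """Collect every anchor href that points at a .zip file."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Matching on ".zip" covers both "_geotiff.zip" and plain ".zip" names
        if href and href.lower().endswith(".zip"):
            full_url = urljoin(self.base_url, href)
            if full_url not in self.links:
                self.links.append(full_url)


def find_zip_links(html, base_url="https://data.humdata.org/"):
    """Return absolute URLs of all .zip links found in an HTML string."""
    parser = ZipLinkParser(base_url)
    parser.feed(html)
    return parser.links
```

Relative links are resolved against `base_url`, and duplicates are dropped so each archive is requested only once.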

Setting up the download is fairly simple and only requires a destination folder. The tool automatically scans for already downloaded files and skips them, so it picks up where it left off if you need to interrupt the download process.

The tool also handles a keyboard interruption gracefully. The main URL is used to request the files for each country/location, and searches are made for population datasets. The tool might take a bit of time depending on the datasets, so go stretch if you want. I am sure this can be optimized further.
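The skip-and-resume behavior can be sketched roughly as follows. This is an illustration of the idea under my own assumptions, not the code in the repo: the function name `download_all`, the `.part` suffix for unfinished files, and the injectable `fetch` argument are all hypothetical choices.

```python
import os
from urllib.parse import urlparse
from urllib.request import urlretrieve


def download_all(urls, dest_dir, fetch=urlretrieve):
    """Download each URL into dest_dir, skipping files that already exist."""
    os.makedirs(dest_dir, exist_ok=True)
    downloaded, skipped = [], []
    for url in urls:
        name = os.path.basename(urlparse(url).path)
        target = os.path.join(dest_dir, name)
        if os.path.exists(target):
            skipped.append(name)  # finished on an earlier run
            continue
        partial = target + ".part"
        try:
            fetch(url, partial)
            # Only complete downloads receive the final file name
            os.replace(partial, target)
            downloaded.append(name)
        except KeyboardInterrupt:
            # Ctrl+C: remove the unfinished file and stop cleanly
            if os.path.exists(partial):
                os.remove(partial)
            print(f"Interrupted while downloading {name}")
            break
    return downloaded, skipped
```

Writing to a temporary name first means an interrupted download is never mistaken for a finished one, so rerunning the function simply continues with whatever is still missing.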

Let’s Unzip and Ship

The next tool simply unzips only the TIF files to a destination folder. Again, it skips existing files.
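A minimal sketch of that step with the standard `zipfile` module might look like the following. The function name `extract_tifs` is hypothetical, and flattening any folders inside the archive is my own assumption about the desired layout.

```python
import os
import shutil
import zipfile


def extract_tifs(zip_path, dest_dir):
    """Extract only the .tif/.tiff members of zip_path into dest_dir."""
    os.makedirs(dest_dir, exist_ok=True)
    extracted = []
    with zipfile.ZipFile(zip_path) as archive:
        for member in archive.infolist():
            # Drop any folder structure inside the archive
            name = os.path.basename(member.filename)
            if not name.lower().endswith((".tif", ".tiff")):
                continue  # sidecar files, readmes, folders
            target = os.path.join(dest_dir, name)
            if os.path.exists(target):
                continue  # already unzipped on an earlier run
            with archive.open(member) as src, open(target, "wb") as dst:
                shutil.copyfileobj(src, dst)
            extracted.append(name)
    return extracted
```

Calling it once per downloaded archive leaves a flat folder of TIF files ready for upload, and a second pass over the same archives extracts nothing new.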

Shipping to Google Earth Engine

There are multiple ways to ingest these images into Google Earth Engine; for example, you can upload them to your GCS bucket and ingest from there. For this I used another tool I wrote called geeup. The readme file walks through these steps. The metadata for these datasets is kept simple: the resolution is 30m, and the dates are assumed from the zip files and set as system:time_start for filtering if needed. Use the upload methodology that works for you.
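Earth Engine stores system:time_start as milliseconds since the Unix epoch, so whatever date is assumed for a file has to be converted before it goes into the metadata. A small sketch of that conversion is below. The function name and the YYYY-MM-DD input format are assumptions for illustration, and how the resulting value is passed to the uploader depends on the tool you use.

```python
from datetime import datetime, timezone


def to_time_start(date_string):
    """Convert a 'YYYY-MM-DD' date to milliseconds since the Unix epoch (UTC)."""
    moment = datetime.strptime(date_string, "%Y-%m-%d").replace(tzinfo=timezone.utc)
    return int(moment.timestamp() * 1000)


# Hypothetical date, used here only to show the conversion
print(to_time_start("2020-01-01"))
```

Pinning the timestamp to UTC keeps the value the same regardless of the time zone of the machine preparing the metadata.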

There you have it: the dataset is now part of a shared collection for others to use. The whole ecosystem for Earth Engine was built around community learning, adaptation, and iteration of code that often then becomes standard and available tooling in the Earth Engine toolbox. It made sense for a community that builds everything from its own apps to open source integration software, code scripts, and hackathons with Google Earth Engine to also curate some beneficial collections for broader use as bridging mechanisms.

For now, this is an experiment with community datasets and open designs using tools and methods. Truly having access to data allows users to use it and ask questions we often don’t think about. Here’s hoping it is put to some good use, and if you enjoyed the article feel free to star the GitHub repo to continue the experiment.